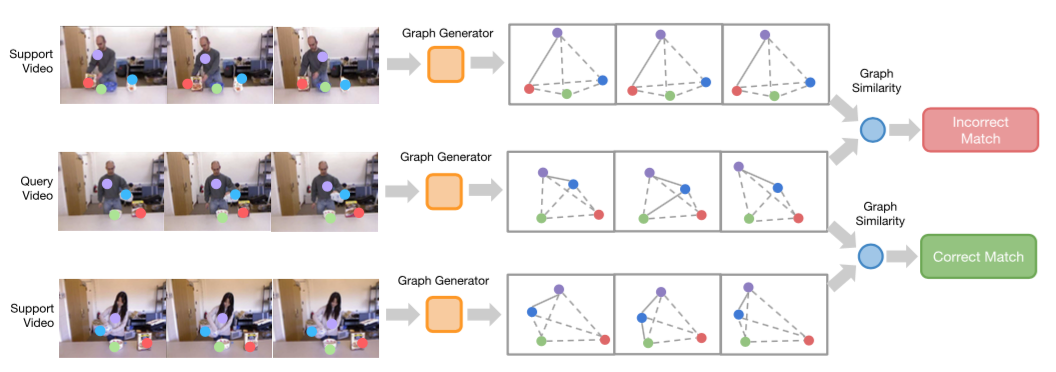

Neural Graph Matching Networks for Fewshot 3D Action Recognition

Michelle Guo, Edward Chou, De-An Huang, Shuran Song, Serena Yeung, Li Fei-Fei

Abstract

We propose Neural Graph Matching (NGM) Networks, a novel framework that can learn to recognize a previous unseen 3D action class with only a few examples. We achieve this by leveraging the inherent structure of 3D data through a graphical representation. This allows us to modularize our model and lead to strong data-efficiency in few-shot learning. More specifically, NGM Networks jointly learn a graph generator and a graph matching metric function in a end-to-end fashion to directly optimize the few-shot learning objective. We evaluate NGM on two 3D action recognition datasets, CAD-120 and PiGraphs, and show that learning to generate and match graphs both lead to significant improvement of few-shot 3D action recognition over the holistic baselines.